
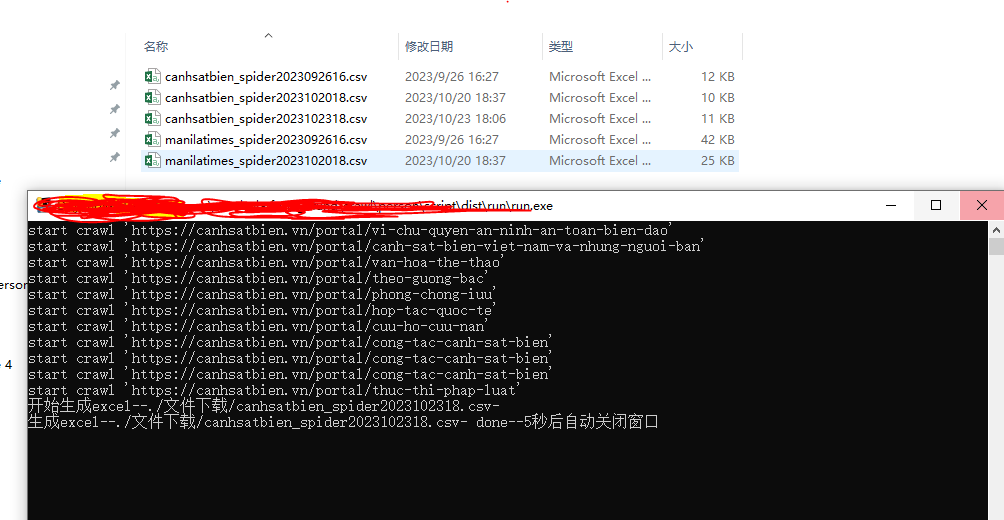

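```python
import csv
import re
import time

import requests
import urllib3
from lxml import etree

# Local helper modules from the author's project (not included in this post)
from utils.format_date import get_current_date
from utils.mkdir_folder import mkdir

# Requests below use verify=False, so silence the InsecureRequestWarning noise
urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)
```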
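The script imports two helpers, `get_current_date` and `mkdir`, from the author's own `utils` package, which the post does not show. To run the code without that package, a minimal stand-in could look like this. Both bodies are assumptions inferred from how the script calls them: a `date_format` keyword that is passed to `strftime`, and a folder path that may already exist.

```python
import os
from datetime import datetime


# Hypothetical stand-in for utils.format_date.get_current_date:
# assumed to format the current local time with the given strftime pattern.
def get_current_date(date_format="%Y-%m-%d"):
    return datetime.now().strftime(date_format)


# Hypothetical stand-in for utils.mkdir_folder.mkdir:
# assumed to create the folder (and any parents) only if it is missing.
def mkdir(path):
    os.makedirs(path, exist_ok=True)
```

With these defined in the script and the two `from utils...` imports removed, `get_current_date(date_format='%Y-%m')` yields a year-month string in the same `YYYY-MM` shape the crawler compares against.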

```python
def write_csv_header(file_name, headers, encoding="gb18030"):
    """
    Write the CSV header row.
    headers = ['ANN_DATE', 'FILE_NAME', 'FILE_URL']
    """
    with open(file_name, 'a+', newline='', encoding=encoding) as f:
        f_csv = csv.DictWriter(f, headers)
        f_csv.writeheader()


def write_csv(file_name, rows, headers, encoding="gb18030"):
    """
    Append rows (dicts keyed by the header names) to the CSV, e.g.:
    rows = []
    s = {'ANN_DATE': i[8], 'FILE_NAME': i[9], 'FILE_URL': i[4]}
    rows.append(s)
    """
    # Use file_name as given: prefixing './' would break absolute paths
    with open(file_name, 'a+', newline='', encoding=encoding) as f:
        f_csv = csv.DictWriter(f, headers)
        f_csv.writerows(rows)
```
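`write_csv_header` and `write_csv` are thin wrappers around `csv.DictWriter`: the header list fixes the column order, and each row is a dict keyed by those names. The same two calls can be tried against an in-memory buffer instead of a file; the rows below are made-up sample data shaped like the dicts `get_data` collects.

```python
import csv
import io

fieldnames = ['title', 'url', 'publish_time']
# Made-up sample rows with the same keys as the crawler's items
rows = [
    {'title': 'Example A', 'url': 'https://example.com/2023/09/01/a/', 'publish_time': '2023-09-01'},
    {'title': 'Example B', 'url': 'https://example.com/2023/09/02/b/', 'publish_time': '2023-09-02'},
]

buf = io.StringIO(newline='')
writer = csv.DictWriter(buf, fieldnames)
writer.writeheader()     # what write_csv_header() does
writer.writerows(rows)   # what write_csv() does
print(buf.getvalue())
```

By default `DictWriter` fills a missing key with an empty value (`restval=''`) but raises `ValueError` for a key that is not in the header list, so the item dicts must not carry extra fields.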

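```python
# Browser-like User-Agent so the site serves its regular HTML page
headers = {
    "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36"
}

# Add session cookies here if the site requires them
cookies = {
}

# Results collected across all pages (get_data appends to this list)
item_list = []


def get_data(ids="russia", page=1):
    """Crawl one search-results page for `ids`, then recurse into the next page."""
    url = "https://www.manilatimes.net/search"
    params = {
        "query": ids,
        "pgno": page
    }
    exit_flag = False
    # Up to 5 attempts for this page; stop after the first successful response
    for limit_count in range(5):
        try:
            response = requests.get(url, headers=headers, cookies=cookies, params=params,
                                    verify=False, timeout=15)
            response.raise_for_status()
        except Exception as err:
            print(f"=====error====={err}")
            continue
        else:
            print(f"start crawl '{response.url}'")
            html = etree.HTML(response.content.decode("utf-8"))
            # Month-name lookup (defined but unused: the date is read from the article URL)
            time_dict = {'January': '01', 'February': '02', 'March': '03', 'April': '04',
                         'May': '05', 'June': '06', 'July': '07', 'August': '08',
                         'September': '09', 'October': '10', 'November': '11', 'December': '12'}
            data_list = html.xpath('//div[@class="item-row-2 flex-row flex-between"]/div')
            for i in data_list:
                item = dict()
                item["title"] = "".join(i.xpath('./div/a/@title'))
                item["url"] = "".join(i.xpath('./div/a/@href'))
                # Fallback date: the text shown on the result card
                publish_time = "".join(
                    i.xpath('./div/div[@class="col-content-1"]/div[2]/span/text()')
                ).replace("-", "").strip()
                # Preferred date: /YYYY/MM/DD/ in the article URL, rewritten as YYYY-MM-DD
                r = re.search(r'/(\d{4}/\d{2}/\d{2})/', item["url"])
                if r:
                    publish_time = r.group(1).replace("/", "-")
                item["publish_time"] = publish_time
                current_month = get_current_date(date_format='%Y-%m')
                if current_month == item["publish_time"][0:7]:
                    item_list.append(item)
                else:
                    # Results are assumed newest-first, so an older article ends the crawl
                    exit_flag = True
                    continue
            # Fetch the next page only if this one had results and none were older;
            # without the data_list check an empty page would recurse forever
            if data_list and not exit_flag:
                get_data(ids, page=page + 1)
            break
```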
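`get_data` reads the publish date from the `/YYYY/MM/DD/` segment of the article URL and only falls back to the card's date text when the URL has no such segment, while the `time_dict` month table is never used. If the card text contains a date like `September 12, 2023` (an assumption; the post does not show the site's markup), the month table could convert it to the same `YYYY-MM-DD` form. A sketch with a hypothetical helper named `parse_publish_date`:

```python
import re

# Month-name table, same contents as time_dict in get_data()
MONTHS = {'January': '01', 'February': '02', 'March': '03', 'April': '04',
          'May': '05', 'June': '06', 'July': '07', 'August': '08',
          'September': '09', 'October': '10', 'November': '11', 'December': '12'}


def parse_publish_date(url, fallback_text=""):
    """Hypothetical helper: return 'YYYY-MM-DD', or '' if no date is found."""
    # 1) /YYYY/MM/DD/ in the article URL, as get_data() already does
    m = re.search(r'/(\d{4})/(\d{2})/(\d{2})/', url)
    if m:
        return "-".join(m.groups())
    # 2) Assumed card-text format, e.g. "September 12, 2023"
    m = re.search(r'([A-Z][a-z]+) (\d{1,2}), (\d{4})', fallback_text)
    if m and m.group(1) in MONTHS:
        return f"{m.group(3)}-{MONTHS[m.group(1)]}-{int(m.group(2)):02d}"
    return ""


print(parse_publish_date("https://example.com/2023/09/12/news/story/"))
print(parse_publish_date("https://example.com/story", "September 5, 2023"))
```

Either way the result starts with `YYYY-MM`, so the `[0:7]` slice in `get_data` can compare it with `get_current_date(date_format='%Y-%m')`.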

```python
def run_spider():
    # Output folder ("文件下载" means "downloads")
    mkdir("./文件下载")
    web_name = "manilatimes_spider"
    ids = "russia"
    get_data(ids, page=1)
    # File name ends with a YYYYMMDDHH timestamp
    file_name = f"./文件下载/{web_name}{get_current_date(date_format='%Y%m%d%H')}.csv"
    print(f"Generating CSV: {file_name}")
    headers2 = ['title', 'url', 'publish_time']
    # utf-8-sig writes a BOM so Excel detects the UTF-8 encoding
    write_csv_header(file_name, headers2, encoding='utf-8-sig')
    write_csv(file_name, item_list, headers2, encoding='utf-8-sig')
    print(f"CSV written: {file_name}. The window closes in 5 seconds.")
    time.sleep(5)


if __name__ == '__main__':
    run_spider()
```
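`get_data` moves to the next page by calling itself, so every page adds a stack frame and Python's default recursion limit (1000) caps how far it can go. The same stop rules (empty page, or an article older than the current month) can be written as a plain loop. In this sketch `fetch_page` is a hypothetical stand-in for the request-and-parse part of `get_data` that returns the item dicts found on one page, and `max_pages` is an added safety cap that the original does not have.

```python
from typing import Callable, Dict, List

Item = Dict[str, str]


def crawl_current_month(fetch_page: Callable[[int], List[Item]],
                        current_month: str,
                        max_pages: int = 100) -> List[Item]:
    """Collect items whose publish_time starts with current_month ('YYYY-MM')."""
    collected: List[Item] = []
    for page in range(1, max_pages + 1):
        items = fetch_page(page)
        if not items:                # empty page: no more results
            break
        reached_older = False
        for item in items:
            if item["publish_time"][:7] == current_month:
                collected.append(item)
            else:
                reached_older = True  # same role as exit_flag in get_data()
        if reached_older:
            break
    return collected


# Usage with fake pages instead of HTTP requests
fake_pages = {
    1: [{"title": "a", "publish_time": "2023-09-20"}, {"title": "b", "publish_time": "2023-09-10"}],
    2: [{"title": "c", "publish_time": "2023-09-02"}, {"title": "d", "publish_time": "2023-08-30"}],
    3: [{"title": "e", "publish_time": "2023-08-01"}],
}
result = crawl_current_month(lambda p: fake_pages.get(p, []), "2023-09")
print([item["title"] for item in result])
```

In the real script, `fetch_page` would wrap the `requests.get` call and the XPath extraction, and `current_month` would come from `get_current_date(date_format='%Y-%m')`. Returning a list also removes the need for the module-level `item_list`.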